-

Sharon Goldwater (Edinburgh)

Title: Modelling changes in infant speech perception: the role of phonetic categories

Abstract: During the first year of life, infants’ speech perception changes. As they learn about their native language, their ability to discriminate non-native sound contrasts declines. For example, [r] and [l] are phonemically contrastive in English, but not in Japanese, and although six-month-olds learning either language are able to discriminate [r]/[l], Japanese-learning infants lose this ability by about 12 months. These effects have typically been explained as a result of infants learning phonetic categories (i.e., perception is tuned to optimize category identification and/or discrimination), and have been taken as evidence that infants learn adult-like phonetic categories very early. However, in this talk I will present evidence that such a conclusion is premature. By developing computational models that learn from real (non-idealized) continuous speech input, and testing the models on an analog of infant discrimination tasks, we show that classic behavioral results can be simulated without having learned adult-like categories, or indeed any categorical representations at all. I will discuss the implications for future modelling work and for our understanding of perceptual development in infancy.

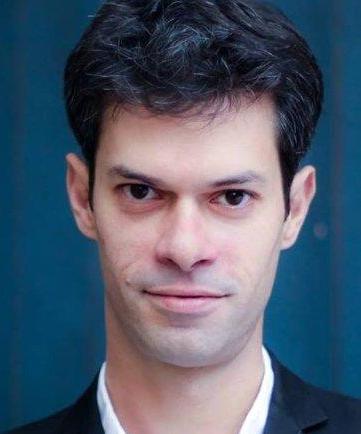

Sharon Goldwater is a Reader (similar to a US Associate Professor) in the Institute for Language, Cognition and Computation at the University of Edinburgh’s School of Informatics. She received her PhD in 2007 from Brown University, supervised by Mark Johnson, and spent two years as a postdoctoral researcher at Stanford University before moving to Edinburgh. Her research interests include unsupervised learning for natural language processing, computer modelling of language acquisition in children, and computational studies of language use. Dr. Goldwater holds a Scholar Award from the James S McDonnell Foundation for her work on “Understanding synergies in language acquisition through computational modelling” and is the 2016 recipient of the Roger Needham Award from the British Computer Society for “distinguished research contribution in computer science by a UK-based researcher who has completed up to 10 years of post-doctoral research.” Dr. Goldwater has sat on the editorial boards of several journals, including Computational Linguistics, Transactions of the Association for Computational Linguistics, and OPEN MIND: Advances in Cognitive Science (a new open-access journal). She co-chaired the 2014 Conference of the European Chapter of the Association for Computational Linguistics (EACL) and is now chair-elect of the EACL governing board.

-

Géraldine Walther (Zurich) and Benoît Sagot (Inria)

Title: Morphological complexities

Abstract: Seeking ways of defining and measuring the morphological complexity of a language has been an area of interest for several decades now. But our understanding of morphological systems and their intricacies has since progressed and changed significantly, first building on insights from classic information-theoretic approaches and more recently from new findings within the cognitive sciences. The first approaches to morphological complexity were mostly counting-based, using structural properties of inflectional paradigms as formal indicators (McWhorter, 2011, among others). However, several studies have shown that such approaches do not allow for principled ways of comparing the morphological complexities of languages with different morphological properties (e.g., Bonami and Henri, 2010). A decade ago, information-theoretic approaches were introduced with the aim of taking into account system-wide properties of inflectional systems (Blevins, 2013). While some approaches chose to assess the overall informational content of morphological descriptions (Sagot and Walther, 2011), others studied the predictability of inflected forms based on other forms (Ackerman et al., 2009). Although these approaches are not incompatible – as we will discuss – we will also illustrate ways in which they are all subject to a number of limitations, some fatal (Sagot, 2018). Building on the newer information-theoretic approaches, more recent work has extended the question of purely formal morphological complexity to issues involving speaker-related complexity. Here, interest has shifted towards complexity in morphological processing and learning (Ackerman and Malouf, 2013). This work, which focuses on the relation between paradigm entropy and cognitive cost, has also been successfully complemented by experimental approaches that highlight how morphological systems are not learned and processed in isolation. They participate in an intricate linguistic system, where subsystem interactions are learned and drawn upon by speakers (Filipović Ðurđević and Milin, 2018). In turn, we will also show how these system-wide interactions can be captured formally through observable cross-dependencies between morphological and syntactic patterns that challenge the boundaries traditionally drawn between morphology and other linguistic subfields.

Géraldine Walther is a postdoctoral researcher at the Institute for Comparative Linguistics, University of Zurich, where she works on an SNF research project investigating the relationship between properties of adult language structure and child language acquisition in the Romansch variety of Tuatschin, spoken in the Grisons (Switzerland). She obtained her PhD in 2013 from the Université Paris Diderot. In her thesis, she developed a formal implemented model of inflectional morphology (Parsli). Starting August 2019 she will join George Mason University as an Assistant Professor in Computational Linguistics. Géraldine’s general research interests lie in the fields of morphology and morphosyntax, linguistic typology, and cognitive science. Her work integrates the development and use of computational and quantitative methods. She is interested in the degree of internal cohesion within morphological systems as well as in the role of morphology and morphological units within the broader linguistic system. Her approach is at the same time typological, formal and quantitative. It focuses specifically on system-level patterns of morphological organisation and their consequences for cognitive processing and development, as well as for diachronic change. In collaboration with Benoît Sagot, she has worked on structural complexity measures.

Benoît Sagot is a research fellow at the French national institute for research in computer science, Inria. In 2006, he obtained a PhD in computer science from Université Paris Diderot for his work on formal grammars, parsing algorithms, and the modelling and development of lexica, especially for French. He is now the head of Inria’s ALMAnaCH research team in Paris, a team dedicated to natural language processing (NLP) and computational humanities. His research interests range from NLP to computational linguistics. In NLP, he focuses on formal and neural language modelling, lexical resource development (morphological, syntactic and semantic), as well as tagging and parsing systems and algorithms (with a current focus on neural approaches). He also works on more applied tasks such as text normalisation, text simplification and text mining. In computational linguistics, he focuses mainly on lexical syntax as well as on quantitative and computational morphology, in collaboration with field linguists and formal morphologists. He puts a special emphasis on the French language, but also develops models, resources, and tools for a wide variety of languages, including low-resource ones.

-

Adina Williams (Facebook AI Research)

Abstract: Recently, discussions of fairness in NLP have thrust gender biases in systems and datasets onto center stage (Bordia & Bowman 2019, Gonen & Goldberg 2019, Hoyle et al. 2019, Qian et al. 2019, Sun, Gaut et al. 2019, Zhao et al. 2019, Rudinger et al. 2017, i.a.). Much of this important work presupposes a relationship between gender-marking, which is present on all nouns in the lexicon in languages that have grammatical gender, and sociopolitical or cultural gender, which is the expression of gender social norms and stereotypes. Such a presupposition relies on the notion that grammatical gender is meaningful, a position which finds some a priori support from gender-marking on occupation nouns (e.g., the feminine marker -a on doctor-a in Spanish reveals the gender of the doctor, and without it, one cannot know the gender of the medical professional). However, it is not clear whether grammatical gender is uniformly meaningful across the entire noun lexicon (Corbett 1991). Put simply, the question remains: does grammatical gender actually tell us about the meaning of inanimate nouns? And furthermore, if it does, just how much can it tell us? To address these questions, we use information-theoretic measures to quantify just how much gender reveals about inanimate noun meaning across several gender-marking languages. We uncover a significant and non-trivial relationship between the meaning of inanimates, under our operationalization, and gender-marking. While this finding is important in itself, it is not fully satisfactory, since we do not know what gender tells us about noun meaning. In short, it is not obvious whether gender-marking on inanimate objects reflects a new kind of meaning, or the same kind of meaning as on animate nouns: does the feminine marker on mes-a “table” reflect cultural gender stereotypes as it does on doctor-a (Boroditsky 2003, Boroditsky et al., 2003)? Towards addressing this, we also present an initial attempt to test the hypothesis that gender always contributes stereotypical semantics, which yields equivocal but promising results.

Adina Williams is a postdoctoral researcher in the Facebook Artificial Intelligence Research (FAIR) Group in New York City. She received her PhD in Linguistics under the supervision of Liina Pylkkänen in 2018 from New York University, where she also contributed to the Machine Learning for Language Laboratory in the Center for Data Science with the support of Sam Bowman. Prior to that, she received an MA in Linguistics from Michigan State University, and two BS degrees, one in Mandarin Chinese and one in Molecular Neuroscience, from the University of Michigan. Her research focuses on cognitive scientific approaches to the study of linguistic meaning, particularly compositional meaning mediated by syntactic and morphological structure. Her recent research topics include the neural basis of argument structure and plurality, understanding inherent biases in deep neural networks, natural language inference (benchmark datasets, cross-lingual NLI, and NLI as a task), and idiosyncrasies in meaning in morphological and grammatical systems across languages.

-

Janet Pierrehumbert (Oxford)

Title: Plausible Morphology

Abstract: Productive word-formation patterns are the dominant source of rare and novel words in language. These patterns include inflectional morphology, derivational morphology, and compounding. While computational morphology has made impressive progress, rare and novel words continue to be a bigger challenge for NLP systems than for humans. Thus, it is worth thinking about how current computational algorithms compare to what humans do in acquiring and using a robust and adaptable lexical system. In this talk, I will discuss some characteristics of current algorithms that are cognitively plausible, quite likely being reasons they succeed as well as they do. I will also discuss some problems that must be addressed to create even more plausible algorithms in the future.

Janet B. Pierrehumbert is Professor of Language Modeling in the Department of Engineering Science, University of Oxford. Her MIT Ph.D. thesis developed a model of the phonology and phonetics of English intonation. She was a Member of Technical Staff in Linguistics and Artificial Intelligence at AT&T Bell Labs from 1982 to 1989, where she worked on algorithms for providing natural and expressive intonation in speech synthesis. After moving to Northwestern University in 1989, she turned to experimental and computational investigations of lexical systems. She moved to Oxford in 2015. Her current research focuses on how lexical systems can be acquired from statistical patterns in linguistic experience, how they are deployed in creating and processing new words, and how cognitive and social factors interact to determine lexical patterns in linguistic communities. She teaches the course on statistical NLP for social data scientists in Oxford’s new Social Data Science post-graduate program. Pierrehumbert is a Fellow of the Cognitive Science Society, the Linguistic Society of America, and the American Academy of Arts and Sciences. She was elected as a member of the US National Academy of Sciences in 2019.